Secrets of Machine Learning in 2025

Going into 2025, the machine learning landscape looks like something I could only dream of a decade ago. As an ML engineer, I've had a front-row seat to breakthroughs that have rewritten our playbook, but the ideas behind them rarely get explained plainly. So here's my behind-the-curtain take on what is actually powering ML today.

1. Frugal Machine Learning: Doing More with Less

One of the biggest shifts is the rise of Frugal Machine Learning (FML): building models that are energy-efficient, data-light, and cost-effective. We're training and deploying models with minimal compute and data, which suits edge and IoT scenarios where resources are tight. Techniques like model compression (pruning, quantization), knowledge distillation, and dynamic architecture optimization have become staples in my toolkit. Why it matters: It's not just eco-conscious, it's pragmatic. Less wasted compute, faster iteration, and a lower barrier to entry for lean teams.
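To make knowledge distillation concrete, here is a minimal sketch of the loss a small "student" model can be trained on to mimic a larger "teacher". It assumes the common temperature-softened KL formulation; the function names and the default temperature are illustrative choices, not any particular library's API.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # ranks the classes the other way round

print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))
```

The student that agrees with the teacher gets the smaller loss. In practice this term is usually mixed with an ordinary cross-entropy loss on whatever true labels are available.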

2. Multimodal & Neuro‑Symbolic AI: Smarter, More Human‑Like Reasoning

We no longer silo AI by data type. In 2025, multimodal ML systems routinely fuse text, images, speech, and more in a single model, improving everything from healthcare diagnoses to autonomous navigation. On top of that, neuro-symbolic AI is having a moment. By combining neural nets with symbolic logic, these systems can check their outputs against explicit rules, which makes their reasoning more reliable and can cut down on hallucinations, especially in constrained processes like warehouse logistics or shopping assistants. What I love: My models now pick up patterns and articulate explanations, like a colleague, not just a black box.
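One simple way to picture the neuro-symbolic idea is a neural model that proposes and a rule layer that vetoes. The sketch below assumes a hypothetical warehouse scenario: the `scores` dictionary stands in for neural network confidences, and the rule names and state fields are invented for illustration.

```python
def pick_valid_action(scored_actions, rules, state):
    """Return the best-scoring action that breaks no rule, plus why others were rejected."""
    rejected = {}
    ranked = sorted(scored_actions.items(), key=lambda kv: kv[1], reverse=True)
    for action, _score in ranked:
        violated = [name for name, rule in rules.items() if not rule(action, state)]
        if not violated:
            return action, rejected
        rejected[action] = violated  # keep the reasons so the choice can be explained
    return None, rejected

# Hypothetical warehouse state and stand-in model scores per shelf.
state = {"free_slots": {"A": 0, "B": 3}, "max_kg": {"A": 50, "B": 20}, "item_kg": 12}
scores = {"A": 0.9, "B": 0.7}
rules = {
    "shelf_has_space": lambda shelf, s: s["free_slots"][shelf] > 0,
    "weight_within_limit": lambda shelf, s: s["item_kg"] <= s["max_kg"][shelf],
}

choice, rejected = pick_valid_action(scores, rules, state)
print(choice, rejected)
```

The model prefers shelf A, but the symbolic layer rejects it because the shelf is full, and the list of violated rules doubles as a human-readable explanation.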

3. Edge AI & Federated Learning: Privacy, Speed, Autonomy

Cloud-first is no longer the default. Edge ML has taken over for applications that demand real-time responses and data sovereignty: think wearables detecting health patterns or robots reacting in milliseconds. In parallel, federated learning lets us train models across distributed devices without centralizing data. Each device trains locally and shares only model updates, which bolsters privacy and compliance in sectors like healthcare and finance. My secret edge: I prototype and deploy AI that stays local, stays private, and stays fast.
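The core loop of federated learning can be sketched in a few lines. This is a toy version of federated averaging (FedAvg) for a one-parameter linear model, assuming two simulated clients whose data never leaves the `local_update` call; the function names, learning rate, and data are illustrative.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one client's private data for y ~ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by how much data each client has."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total

def train_federated(clients, rounds=20, w0=0.0):
    w = w0
    for _ in range(rounds):
        # Each client starts from the current global weight and trains locally.
        updates = [local_update(w, data) for data in clients]
        # Only the updated weights are sent back, never the raw (x, y) pairs.
        w = federated_average(updates, [len(data) for data in clients])
    return w

# Two simulated devices; both datasets follow y = 3 * x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]
global_w = train_federated(clients)
print(global_w)
```

The global weight converges toward 3 even though the server only ever sees per-client weights. Real deployments add pieces this sketch leaves out, such as secure aggregation and handling clients that drop out.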

4. Explainable, Ethical, and Responsible AI (XAI & eAI)

With AI embedded in high-stakes areas like finance, health, and justice, deploying opaque models is no longer acceptable. Explainable AI (XAI) and Ethical AI (eAI) are now baked into every stage—from dataset design to inference architecture. Models must show why they decided what they did. On the ground: I'm often tweaking models to output decision paths, bias scores, or even legal summaries—just so departments can trust and verify what the AI says.
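As one example of a "bias score", the sketch below computes the demographic parity difference: the gap in positive-prediction rates between groups. It is only one of several fairness metrics, and the group labels and predictions here are made-up illustrative data.

```python
def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the spread in positive-prediction rate across groups."""
    counts = {}
    for pred, group in zip(predictions, groups):
        positives, total = counts.get(group, (0, 0))
        counts[group] = (positives + (1 if pred == 1 else 0), total + 1)
    rates = {g: positives / total for g, (positives, total) in counts.items()}
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical loan approvals (1 = approved) for applicants in two groups.
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

A gap of 0 means every group receives positive predictions at the same rate. A large gap is a signal to investigate, not proof of unfairness on its own.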

5. Zero‑Data & Self‑Supervised Learning: Training Without Labels

Labels are expensive and limiting, so we're using self-supervised and zero-shot (sometimes called zero-data) learning methods to train on unlabeled data, or even to generalize to tasks with no direct examples. This is especially common in startups or R&D labs with limited datasets. Result? AI that picks up trends, adapts, and performs well with minimal supervision.
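The trick behind self-supervised learning is that the data supplies its own labels. A minimal sketch, assuming a masked-token pretext task: every token in an unlabeled sentence is hidden in turn, and the hidden token becomes the training target. The helper name and mask string are illustrative.

```python
def make_masked_examples(tokens, mask_token="[MASK]"):
    """Turn one unlabeled token sequence into (masked_input, target) training pairs."""
    examples = []
    for i, target in enumerate(tokens):
        masked = tokens[:i] + [mask_token] + tokens[i + 1:]
        examples.append((masked, target))
    return examples

sentence = ["edge", "models", "run", "locally"]
for masked_input, target in make_masked_examples(sentence):
    print(masked_input, "->", target)
```

No human labeled anything here, yet a four-token sentence yields four supervised examples. Production systems typically mask a random subset of tokens rather than every position.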

6. Quantum Machine Learning: A New Dimension

It's not all classical computing anymore. Quantum ML, particularly techniques like Quantum Kernel-Aligned Regressors (QKAR), is emerging as a potential game-changer, especially for complex fields like chip design, where it has reportedly shown efficiency gains of up to 20%. We're also starting to explore Explainable QML, which blends quantum models with explainability: another step toward trustworthy, high-power ML.
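The "kernel-aligned" part of the name can be illustrated without quantum hardware. The sketch below computes kernel-target alignment, a score for how well a kernel matrix matches the targets, and uses a classical RBF kernel purely as a stand-in for a quantum kernel. It is an assumption-laden illustration of the alignment idea, not the QKAR method itself.

```python
import math

def rbf_kernel_matrix(xs, gamma):
    """Classical RBF kernel on scalars; a stand-in for a quantum kernel here."""
    return [[math.exp(-gamma * (a - b) ** 2) for b in xs] for a in xs]

def kernel_target_alignment(K, y):
    """Normalized Frobenius inner product between K and the target matrix y y^T."""
    n = len(y)
    inner = sum(K[i][j] * y[i] * y[j] for i in range(n) for j in range(n))
    k_norm = math.sqrt(sum(K[i][j] ** 2 for i in range(n) for j in range(n)))
    y_norm = sum(v * v for v in y)  # Frobenius norm of y y^T equals ||y||^2
    return inner / (k_norm * y_norm)

xs = [0.0, 0.1, 2.0, 2.1]    # two tight clusters of inputs
y = [1.0, 1.0, -1.0, -1.0]   # targets that follow the clusters

for gamma in (1.0, 100.0):
    K = rbf_kernel_matrix(xs, gamma)
    print(gamma, kernel_target_alignment(K, y))
```

A higher alignment suggests the kernel's notion of similarity matches the targets better, so kernel parameters can be tuned to increase it. In a quantum setting the tuned parameters would belong to the circuit that defines the kernel.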

7. Agentic AI: Autonomous, Collaborative Systems

Picture AI agents that handle tasks end-to-end—without being micromanaged. These “agentic AI” systems are autonomous, goal‑oriented, and self‑adapting, powering everything from enterprise workflows to home robotics. The secret sauce: You orchestrate dozens of agents—and they self-organize, learn, and optimize, while you stay focused on strategy.
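Stripped of the models themselves, orchestration comes down to routing work to whichever agent can handle it. The skeleton below is a deliberately simple sketch: the `Agent` class, skill names, and least-loaded routing rule are all hypothetical, and a real agent's `run` method would wrap a model or tool call.

```python
from dataclasses import dataclass, field

@dataclass
class Agent:
    name: str
    skills: set
    log: list = field(default_factory=list)

    def run(self, task):
        # Placeholder for real work (a model call, a tool invocation, and so on).
        self.log.append(task["id"])
        return f"{self.name} finished {task['id']}"

def orchestrate(tasks, agents):
    """Assign each task to the least-loaded agent that has the required skill."""
    results = {}
    for task in tasks:
        capable = [a for a in agents if task["skill"] in a.skills]
        if not capable:
            results[task["id"]] = None  # nobody can do this; escalate to a human
            continue
        agent = min(capable, key=lambda a: len(a.log))
        results[task["id"]] = agent.run(task)
    return results

agents = [Agent("researcher", {"search"}), Agent("writer", {"draft", "search"})]
tasks = [
    {"id": "t1", "skill": "search"},
    {"id": "t2", "skill": "search"},
    {"id": "t3", "skill": "draft"},
    {"id": "t4", "skill": "deploy"},
]
results = orchestrate(tasks, agents)
print(results)
```

The unassigned `t4` task shows where a human stays in the loop: the orchestrator flags what no agent is equipped to do rather than guessing.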

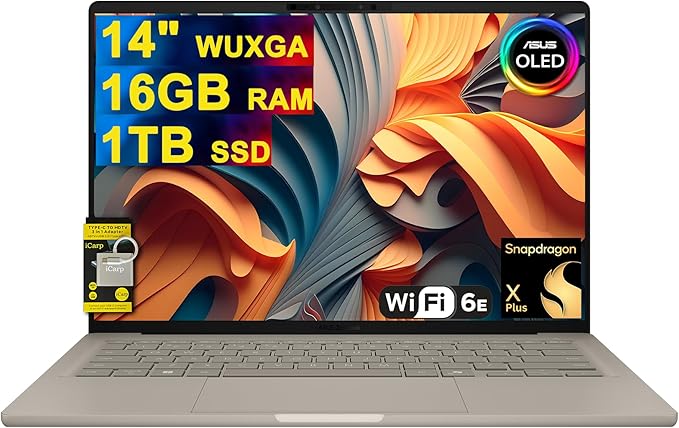

Gear That Powers the Secret Sauce

To run all this, you need serious hardware: think laptops with robust discrete GPUs, fast memory, and enough thermal headroom for intense model tuning and prototyping. Ideal specs include:

- NVIDIA RTX 40-series GPU (e.g., RTX 4080 or better)
- At least 32 GB RAM (64 GB preferred for large models)
- Fast SSD (2 TB+ for datasets and virtual environments)
- Efficient cooling and battery management for coding marathons

8. Quantum Hybrid & Data‑Centric Innovations

Much of the AI frontier now blends quantum, synthetic, and classical ML methods. From synthetic training data to causal inference built into ML pipelines, we're building smarter, context-aware systems without relying solely on brute-force data.